Frequently Asked Questions

1 What to do if the initial installation fails and you need to clean up and reinstall

Note: This applies only when problems occur during the initial installation and everything must be removed before reinstalling. Confirm carefully before executing the cleanup steps below!

If the installation fails and a full reinstallation is required, clean up the following three areas before starting the reinstallation from Launcher:

1.1 Clean up installed Guance application services

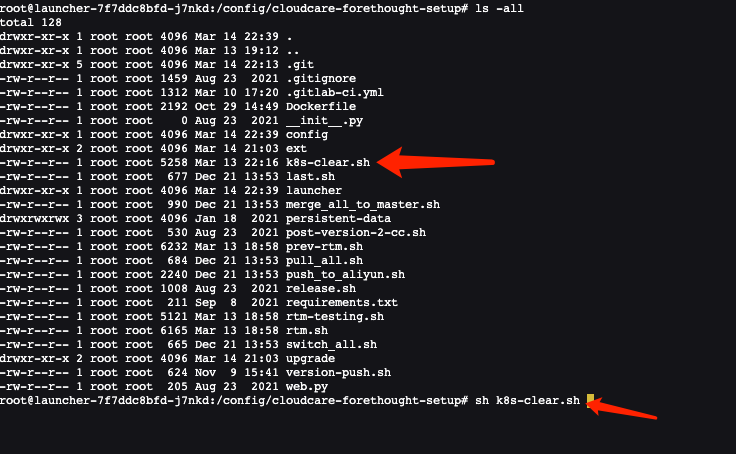

To clean up various Guance application services already installed in Kubernetes, access the Launcher container on the operations machine and execute the built-in cleanup script provided by Launcher:
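Entering the container can be sketched as follows. This is a minimal sketch, assuming Launcher runs in a namespace named launcher and that the container provides /bin/sh; both are assumptions, and the helper name enter_launcher is hypothetical:

```shell
# Hypothetical helper: open a shell inside the Launcher pod.
# $1 = namespace (assumed here to be "launcher"), $2 = Launcher pod name.
enter_launcher() {
  kubectl -n "$1" exec -it "$2" -- /bin/sh
}

# Usage (the pod name is a placeholder):
#   enter_launcher launcher launcher-xxxxxxxx-xxx
# Once inside the container, run the k8s-clear.sh script.
```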

launcher-xxxxxxxx-xxx represents your Launcher service pod name. After entering the container, you can see the k8s-clear.sh script provided by the Launcher service (starting from version 1.47.103, this script is located in the /config/tools directory). Execute this script to clean up all Guance application services and Kubernetes resources.

1.2 Clean up automatically created databases in MySQL

The Launcher container comes with the mysql client tool. Enter the Launcher container and use the following command to connect to the Guance MySQL instance:
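The connection can be sketched with the standard mysql client options. The helper name and the host, port, and user values are hypothetical placeholders, not values taken from the installer:

```shell
# Hypothetical helper: connect to the Guance MySQL instance as an administrator.
# $1 = MySQL host, $2 = port, $3 = administrator user.
# -p makes the client prompt for the password instead of exposing it in the command line.
connect_mysql_admin() {
  mysql -h "$1" -P "$2" -u "$3" -p
}

# Usage (all values are placeholders):
#   connect_mysql_admin <mysql_host> 3306 <admin_user>
```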

You need to connect using the MySQL administrator account. After connecting, execute the following MySQL database and user cleanup commands:

drop database df_core;

drop user df_core;

drop database df_message_desk;

drop user df_message_desk;

drop database df_func;

drop user df_func;

drop database df_dialtesting;

drop user df_dialtesting;

1.3 Clean up automatically created users in InfluxDB

If InfluxDB is used as the time-series engine, use the influx client tool to connect to InfluxDB and execute the following two user cleanup commands:

2 Deployment Notes

2.1 After deployment, can I manually modify Kubernetes resources automatically generated by the installer?

Manual modification is not allowed. When a new version is released and you use Launcher to upgrade, it regenerates the Deployment, Service, Ingress, and other resources from the configuration entered during installation, so manual changes are overwritten. ConfigMap is an exception: its content can be modified manually, but arbitrary changes may cause runtime errors.

3 Standalone Container Rancher Server Certificate Update

3.1 What to do if the certificate has not expired

The Rancher server can run normally. After an upgrade to Rancher v2.0.14+, v2.1.9+, or v2.2.2+, Rancher automatically checks the certificate expiration date and generates a new certificate if the current one is about to expire. Therefore, for a Rancher Server running as a standalone container, simply upgrade Rancher to a version that supports automatic SSL certificate renewal before the certificate expires; no other action is needed.

3.2 What to do if the certificate has expired

The Rancher server cannot run normally. Even after an upgrade to Rancher v2.0.14+, v2.1.9+, or v2.2.2+, certificate errors may still be reported. If this happens, perform the following actions:

- Upgrade Rancher to v2.0.14+, v2.1.9+, or v2.2.2+ as usual;

- Execute the following commands:

- For versions 2.0 or 2.1

docker exec -ti <rancher_server_id> mv /var/lib/rancher/management-state/certs/bundle.json /var/lib/rancher/management-state/certs/bundle.json-bak

- For version 2.2+

docker exec -ti <rancher_server_id> mv /var/lib/rancher/management-state/tls/localhost.crt /var/lib/rancher/management-state/tls/localhost.crt-bak

- For version 2.3+

docker exec -ti <rancher_server_id> mv /var/lib/rancher/k3s/server/tls /var/lib/rancher/k3s/server/tlsbak

# Restart twice: the first restart requests a certificate, the second loads it and starts the server

docker restart <rancher_server_id>

- For version 2.4+

a. Exec into the Rancher Server container

kubectl --insecure-skip-tls-verify -n kube-system delete secrets k3s-serving

kubectl --insecure-skip-tls-verify delete secret serving-cert -n cattle-system

rm -f /var/lib/rancher/k3s/server/tls/dynamic-cert.json

b. Restart the rancher-server

c. Execute the following command to refresh parameters

- Restart the Rancher Server container

4 Rancher server certificate expired causing inability to manage k8s clusters

If the cluster certificate has expired, certificate rotation is not possible even after upgrading to Rancher v2.0.14, v2.1.9, or later. Rancher updates certificates through the Agents, and once the certificate has expired it can no longer communicate with them.
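Whether a certificate has actually expired can be confirmed before changing anything. This is a minimal sketch using openssl, assuming the endpoint (for example the Rancher Server or the Kubernetes API server) is reachable over TLS; the helper name and the example address are hypothetical:

```shell
# Hypothetical helper: print the expiry date (notAfter) of the certificate
# served at the given host:port.
show_cert_expiry() {
  # s_client fetches the served certificate; x509 prints only its end date.
  openssl s_client -connect "$1" </dev/null 2>/dev/null \
    | openssl x509 -noout -enddate
}

# Usage (the address is a placeholder):
#   show_cert_expiry rancher.example.com:443
```

The output is a single line starting with notAfter=, which can be compared with the current date.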

4.1 Solution

Manually set the node time back to a point before the certificate expired. Since the Agent only communicates with the K8S master and the Rancher Server, if the Rancher Server certificate has not expired, only the K8S master node time needs to be adjusted. Adjustment commands:

# Disable ntp synchronization, otherwise the time will update automatically

timedatectl set-ntp false

# Modify node time

timedatectl set-time '2019-01-01 00:00:00'

Then upgrade the Rancher Server, wait until certificate rotation completes, and synchronize the time again.
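Synchronizing the time again can be sketched by re-enabling NTP, the reverse of the set-ntp false step above. The helper name is hypothetical, and this assumes the node uses the time synchronization service managed by timedatectl:

```shell
# Hypothetical helper: turn NTP synchronization back on and show the clock state.
restore_ntp_sync() {
  timedatectl set-ntp true
  # The status output reports whether the system clock is synchronized again.
  timedatectl status
}

# Usage:
#   restore_ntp_sync
```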

Check certificate validity period

5 Why can't I see DataWay in the front end after creating it?

5.1 Common Cause Analysis

- The Dataway service deployed on the server is not running properly.

- The Dataway service configuration file is incorrect: the listening address or the workspace token is not configured correctly.

- The Dataway runtime configuration is wrong; check the Dataway logs to identify the specific problem.

- The server where Dataway is deployed cannot communicate with the kodo service (for example, the correct resolution for the df-kodo service has not been added to hosts).

- The kodo service is abnormal, which can be confirmed by checking the kodo service logs.

- The df-kodo ingress service is not configured correctly, which shows up as being unable to access http|https://df-kodo.<xxxx>:<port>
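The connectivity causes in this list can be narrowed down from the Dataway server. This is a minimal sketch, assuming a Linux host with getent and curl available; the helper name is hypothetical, and the scheme, host, and port must be replaced with your own df-kodo address:

```shell
# Hypothetical helper: check name resolution and HTTP reachability of df-kodo.
# $1 = scheme (http or https), $2 = df-kodo host name, $3 = port.
check_kodo() {
  # Resolution through DNS or /etc/hosts; prints the resolved address if any.
  getent hosts "$2" || echo "no resolution for $2 (check DNS or /etc/hosts)"
  # Print only the HTTP status code; a connection failure prints an error instead.
  curl -sS -o /dev/null -w '%{http_code}\n' --max-time 10 "$1://$2:$3"
}

# Usage (all values are placeholders):
#   check_kodo http df-kodo.<xxxx> <port>
```

A failed resolution points at the hosts entry, while a timeout or connection error with correct resolution points at the ingress or the kodo service itself.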

6 Why can't I use the dial testing service?

6.1 Root Cause Analysis

- The Guance application is deployed in an offline environment and the physical nodes have no internet access. (Most common)

- Self-built probe node network anomaly.

- Regional provider network anomaly.

- Dial test task creation error.

7 Common Deployment Issues and Solutions

7.1 kubectl describe pods reports an unbound immediate PersistentVolumeClaims error

- Check pvc

NAMESPACE    NAME                                    STATUS    VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS       AGE
default      opensearch-single-opensearch-single-0   Bound     pvc-0da2cb6f-1cb9-4630-b0ab-512ce57743a8   16Gi       RWO            openebs-hostpath   19d
launcher     persistent-data                         Pending                                                                        df-nfs-storage     6m3s
middleware   data-es-cluster-0                       Bound     pvc-36e48f5a-37b3-4c28-ad14-059265ee3009   50Gi       RWO            openebs-hostpath   18d

The persistent-data PVC is in Pending status.
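On a cluster with many claims, the Pending ones can be filtered out directly. This is a minimal sketch, assuming the all-namespaces listing whose first three columns are NAMESPACE, NAME, and STATUS, as in the output above; the helper name is hypothetical:

```shell
# Hypothetical helper: list PVCs that are still Pending, as namespace/name.
pending_pvcs() {
  # --no-headers drops the header row; column 3 is STATUS when -A is used.
  kubectl get pvc -A --no-headers | awk '$3 == "Pending" { print $1 "/" $2 }'
}

# Usage:
#   pending_pvcs
```

For the listing above, this would print launcher/persistent-data.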

- Check nfs-subdir-external-provisioner container status

kubectl get pods -n kube-system | grep nfs-subdir-external-provisioner

nfs-provisioner-nfs-subdir-external-provisioner-58b7cdf6f5dr5vr 0/1 ContainerCreating 0 7h7m

- Check the details of nfs-provisioner-nfs-subdir-external-provisioner-58b7cdf6f5dr5vr

kubectl describe -n kube-system pods nfs-provisioner-nfs-subdir-external-provisioner-58b7cdf6f5dr5vr

....

Type Reason Age From Message

---- ------ ---- ---- -------

Warning FailedMount 30m (x49 over 6h53m) kubelet Unable to attach or mount volumes: unmounted volumes=[nfs-subdir-external-provisioner-root], unattached volumes=[kube-api-access-5p4qn nfs-subdir-external-provisioner-root]: timed out waiting for the condition

Warning FailedMount 5m27s (x136 over 7h4m) kubelet Unable to attach or mount volumes: unmounted volumes=[nfs-subdir-external-provisioner-root], unattached volumes=[nfs-subdir-external-provisioner-root kube-api-access-5p4qn]: timed out waiting for the condition

Warning FailedMount 74s (x217 over 7h6m) kubelet MountVolume.SetUp failed for volume "nfs-subdir-external-provisioner-root" : mount failed: exit status 32

Mounting command: mount

Mounting arguments: -t nfs 10.200.14.112:/nfsdata /var/lib/kubelet/pods/3970ff5f-5dbf-419e-a6af-3080508d2524/volumes/kubernetes.io~nfs/nfs-subdir-external-provisioner-root

Output: mount: wrong fs type, bad option, bad superblock on 10.200.14.112:/nfsdata,

missing codepage or helper program, or other error

(for several filesystems (e.g. nfs, cifs) you might

need a /sbin/mount.<type> helper program)

In some cases useful info is found in syslog - try

dmesg | tail or so.

The wrong fs type, bad option error occurs because nfs-utils is not installed on the host, so the mount.nfs helper program is missing.

- Install nfs-utils

Execute the following command on the host:
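The package name depends on the distribution. This is a minimal sketch, assuming yum-based systems provide the NFS client as nfs-utils and apt-based systems provide it as nfs-common; the helper name is hypothetical and the commands must be run as root:

```shell
# Hypothetical helper: install the NFS client tools with the available package manager.
install_nfs_client() {
  if command -v yum >/dev/null 2>&1; then
    yum install -y nfs-utils        # RHEL / CentOS family
  elif command -v apt-get >/dev/null 2>&1; then
    apt-get install -y nfs-common   # Debian / Ubuntu family
  else
    echo "unsupported package manager" >&2
    return 1
  fi
}

# Usage (run on every node that must mount the NFS share):
#   install_nfs_client
```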