DataKit Pipeline Offload

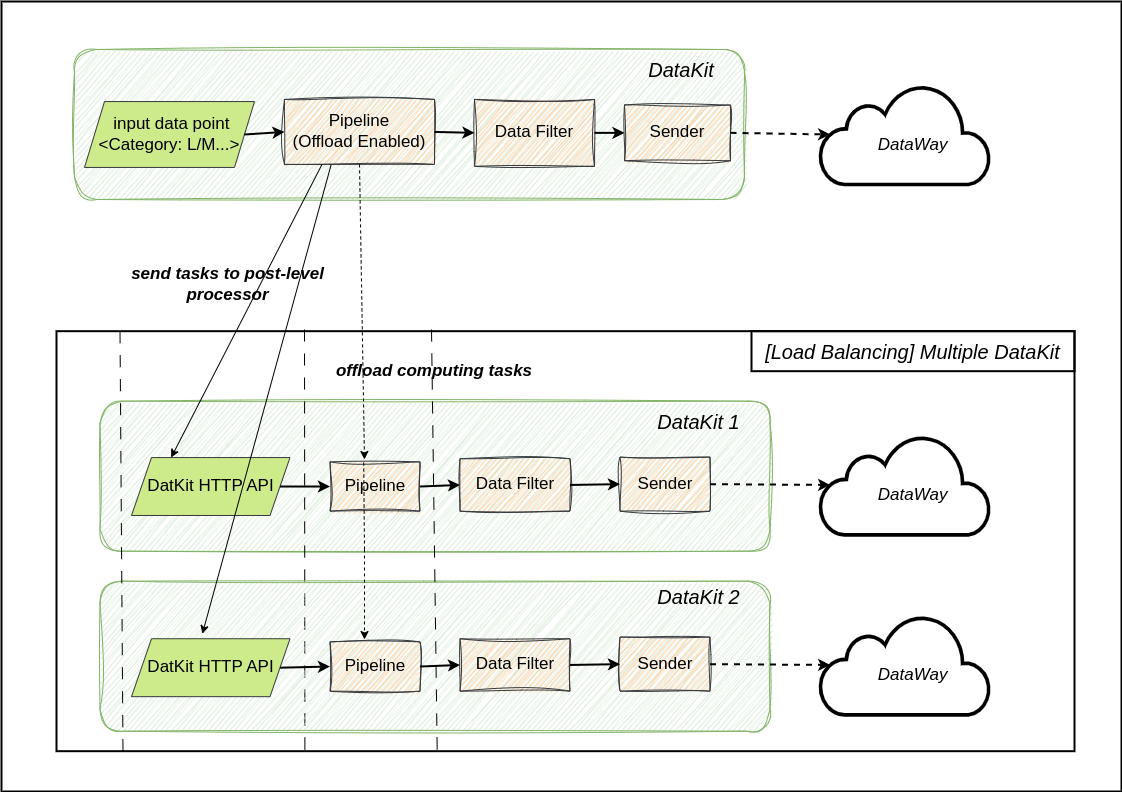

You can use DataKit's Pipeline Offload function to reduce high data latency and high host load caused by data processing.

Configuration Method

Enable and configure the feature in the main configuration file, datakit.conf, as shown below. The currently supported values for receiver are datakit-http and ploffload. Multiple DataKit addresses can be configured to achieve load balancing.

Notice:

- Currently, only data processing tasks of the logging (Logging) category can be offloaded;
- The address of the current DataKit must not be listed in the addresses configuration item; otherwise a loop is formed and the data never leaves the current DataKit;
- Keep the DataWay configuration of the target DataKit consistent with that of the current DataKit; otherwise the receiving DataKit sends the data to its own DataWay address;
- If receiver is set to ploffload, the DataKit on the receiving end must have the ploffload collector enabled.

Check that the target network address is reachable from the current host. A target DataKit that listens only on the loopback address cannot be reached from other hosts.
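The address rules above can be checked before the configuration is deployed. The following is a minimal sketch in Python, not part of DataKit: the function name check_offload_addresses, the local_hosts parameter, and the sample addresses (including port 9529) are assumptions made for illustration. It flags entries in addresses that point at a loopback address or at the current DataKit's own host; it does not resolve DNS names or test real network reachability.

```python
import ipaddress
from urllib.parse import urlsplit


def check_offload_addresses(addresses, local_hosts=()):
    """Return (address, problem) pairs for entries that look misconfigured.

    `local_hosts` is a hypothetical parameter: the host names or IPs of the
    current DataKit, which must not appear in the offload address list.
    """
    problems = []
    for addr in addresses:
        host = urlsplit(addr).hostname
        if host is None:
            problems.append((addr, "no host in URL"))
            continue
        if host in local_hosts:
            problems.append((addr, "points at the current DataKit"))
            continue
        if host == "localhost":
            problems.append((addr, "loopback address"))
            continue
        try:
            if ipaddress.ip_address(host).is_loopback:
                problems.append((addr, "loopback address"))
        except ValueError:
            # Not an IP literal (a DNS name); this sketch does not resolve it.
            pass
    return problems


if __name__ == "__main__":
    # Sample values only; replace with the addresses from datakit.conf.
    sample = ["http://127.0.0.1:9529", "http://10.0.0.5:9529"]
    for addr, problem in check_offload_addresses(sample, local_hosts=("10.0.0.4",)):
        print(f"{addr}: {problem}")
```

An empty result only means that no obvious mistake was found; the target must still be reachable over the network.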

Reference configuration:

    [pipeline]

      # Offload data processing tasks to post-level data processors.
      [pipeline.offload]
        receiver = "datakit-http"
        addresses = [
          # "http://<ip>:<port>"
        ]

If the receiving DataKit has the ploffload collector enabled, the configuration can be:

    [pipeline]

      # Offload data processing tasks to post-level data processors.
      [pipeline.offload]
        receiver = "ploffload"
        addresses = [
          # "http://<ip>:<port>"
        ]

Working Principle

After DataKit finds the Pipeline script for a piece of data, it checks whether the script is a remote script from Observation Cloud. If it is, DataKit forwards the data to a post-level data processor (such as another DataKit) for processing. Load is balanced across the configured addresses in round-robin order.
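The routing decision described above can be sketched as follows. This is an illustration in Python under stated assumptions, not DataKit's actual implementation: the class RoundRobinOffloader, the function route, and the script_is_remote flag are hypothetical names. It only shows how round-robin selection cycles through the configured addresses and how data matched by a non-remote script stays on the current DataKit.

```python
from itertools import cycle


class RoundRobinOffloader:
    """Hand out receiver addresses in round-robin order (hypothetical helper)."""

    def __init__(self, addresses):
        if not addresses:
            raise ValueError("at least one receiver address is required")
        # cycle() repeats the address list indefinitely, in order.
        self._next = cycle(list(addresses))

    def pick(self):
        """Return the next receiver address."""
        return next(self._next)


def route(script_is_remote, offloader):
    """Return the receiver address to forward to, or None to process locally.

    `script_is_remote` stands in for DataKit's check of whether the matched
    Pipeline script is a remote script.
    """
    return offloader.pick() if script_is_remote else None


if __name__ == "__main__":
    # Sample addresses only.
    offloader = RoundRobinOffloader(["http://10.0.0.5:9529", "http://10.0.0.6:9529"])
    for is_remote in (True, True, False, True):
        print(route(is_remote, offloader))
```

Because data that is processed locally does not consume a slot, the rotation advances only when data is actually forwarded.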

Deploy post-level data processor

There are several ways to deploy the data processor (DataKit) for receiving computing tasks:

- host deployment

A DataKit dedicated to data processing is not currently supported. For deploying DataKit on a host, see the documentation.

- container deployment

Set the environment variables ENV_DATAWAY and ENV_HTTP_LISTEN. The DataWay address must match that of the DataKit on which the Pipeline Offload function is configured. It is recommended to map the listening port of the DataKit running in the container to the host.

Reference command: