Offline Environment Deployment Manual

1 Introduction

1.1 Document Overview

This document describes how to deploy Guance in an offline environment (including but not limited to physical servers and IDC server rooms). It covers the complete process, from resource planning and configuration to deploying and running Guance.

Note:

- This document uses dataflux.cn as the example primary domain. Replace it with your own domain in an actual deployment.

1.2 Key Terms

| Term | Description |
|---|---|
| Launcher | A web application used to deploy and install Guance. Follow the steps in the Launcher service to install and upgrade Guance. |
| Operations Machine | A machine with kubectl installed, on the same network as the target Kubernetes cluster. |
| Deployment Machine | A machine that accesses the Launcher service through a browser to complete the guided installation and debugging of Guance. |
| kubectl | The command-line client tool for Kubernetes, installed on the operations machine. |

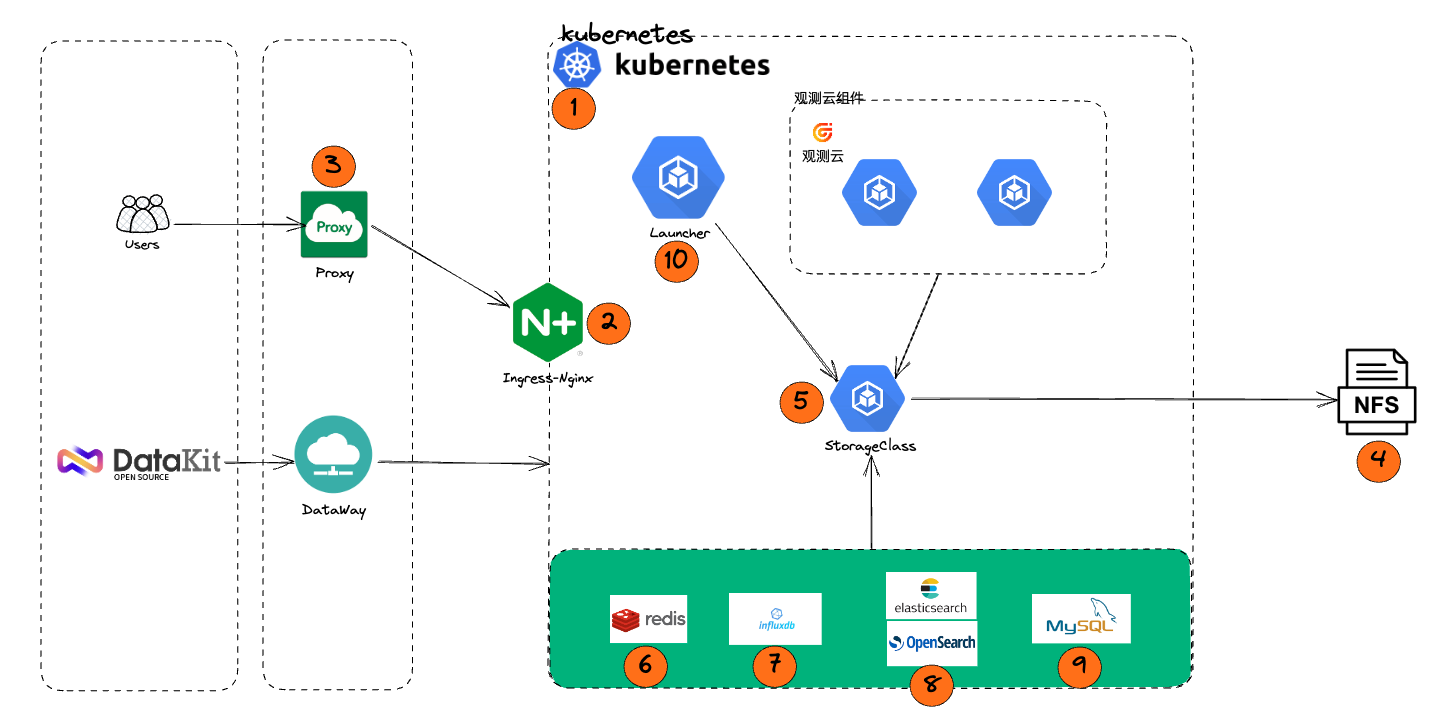

1.3 Deployment Steps Framework

2 Resource Preparation

Offline Environment Resource List

3 Infrastructure Deployment

3.1 Step One: Create Kubernetes Cluster

3.2 Step Two: Kubernetes Ingress Component

Kubernetes Ingress Component Deployment

3.3 Step Three: Proxy Deployment

3.4 Step Four: NFS Deployment

3.5 Step Five: Kubernetes Storage Component

Kubernetes Storage Component Deployment

3.6 Step Six: Redis Deployment

3.7 Step Seven: GuanceDB for Metrics Deployment

3.8 Step Eight: Log Engine Deployment

3.9 Step Nine: MySQL Deployment

4 kubectl Installation and Configuration

4.1 Install kubectl

kubectl is the command-line client tool for Kubernetes. You can use it to deploy applications and to inspect and manage cluster resources. Launcher relies on kubectl to deploy applications. For installation instructions, refer to the official documentation:

https://kubernetes.io/docs/tasks/tools/install-kubectl/
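In an offline environment the operations machine usually cannot download kubectl directly. The following is a minimal sketch, assuming the kubectl binary has already been downloaded on a machine with internet access and copied to the operations machine. The helper name `install_kubectl_binary` and the default target directory `/usr/local/bin` are illustrative assumptions, not part of Guance or Kubernetes.

```shell
# Sketch: install a pre-downloaded kubectl binary on the operations machine.
# install_kubectl_binary is a hypothetical helper; both paths are assumptions.
install_kubectl_binary() {
  src="$1"                          # path to the transferred kubectl binary
  dest_dir="${2:-/usr/local/bin}"   # assumed target directory on the PATH
  if [ ! -f "$src" ]; then
    echo "kubectl binary not found: $src" >&2
    return 1
  fi
  mkdir -p "$dest_dir" || return 1
  cp "$src" "$dest_dir/kubectl" || return 1
  chmod 0755 "$dest_dir/kubectl"    # make the binary executable
}
```

For example, run `install_kubectl_binary ./kubectl` with sufficient privileges for the target directory, then run `kubectl version --client` to confirm that the client is available.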

4.2 Configure kubeconfig

To manage a cluster, kubectl needs the cluster's kubeconfig file. For a cluster deployed with kubeadm, the default kubeconfig file is /etc/kubernetes/admin.conf. Copy the contents of this file to $HOME/.kube/config for the user who runs kubectl on the operations machine.
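The copy step can be sketched as a small shell function. This is only an illustration: `install_kubeconfig` is a hypothetical helper name, and it assumes the kubeconfig file is readable by the current user (admin.conf is normally owned by root, and on a separate operations machine it must first be transferred from a control-plane node).

```shell
# Sketch: copy the kubeadm admin kubeconfig into the current user's kubectl config.
# install_kubeconfig is a hypothetical helper name.
install_kubeconfig() {
  src="${1:-/etc/kubernetes/admin.conf}"   # default kubeadm location
  dest="${2:-$HOME/.kube/config}"          # path kubectl reads by default
  if [ ! -r "$src" ]; then
    echo "cannot read kubeconfig: $src" >&2
    return 1
  fi
  mkdir -p "$(dirname "$dest")" || return 1
  cp "$src" "$dest" || return 1
  chmod 600 "$dest"                        # the file holds cluster admin credentials
}
```

After calling `install_kubeconfig` (optionally with the path of the transferred file as the first argument), `kubectl get nodes` should list the cluster nodes if the configuration is correct.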

5 Start Installing Guance

After completing the steps above, refer to the manual Start Installation to begin installing Guance.