Alibaba Cloud Deployment Manual

1 Introduction

1.1 Document Description

This document describes how to deploy Guance on Alibaba Cloud. It covers the complete process, from resource planning and configuration to deploying Guance and getting it running.

Note:

- This document uses dataflux.cn as the example primary domain name. Replace it with your own domain name during actual deployment.

1.2 Keywords

| Term | Description |
|---|---|
| Launcher | A web application used to deploy and install Guance. Follow the guided steps of the Launcher service to install and upgrade Guance. |
| Operations Machine | A machine with kubectl installed that is on the same network as the target Kubernetes cluster. |
| Installation Machine | A machine that accesses the Launcher service through a browser to complete the guided installation of Guance. |
| kubectl | The Kubernetes command-line client, installed on the operations machine. |

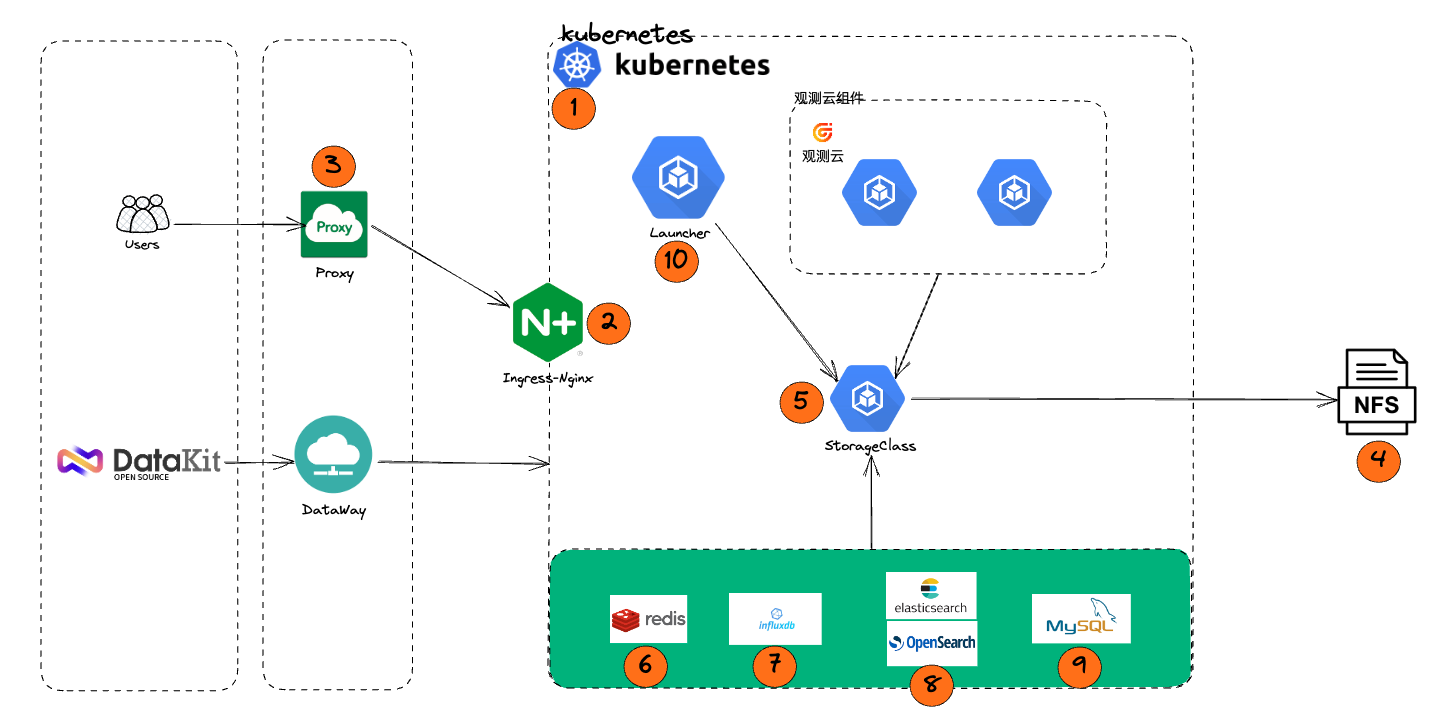

1.3 Deployment Steps Architecture

2 Resource Preparation

3 Infrastructure Deployment

3.1 Deployment Description

Create RDS, InfluxDB, OpenSearch, and NAS storage according to the configuration requirements, all in the same region and the same VPC. ACK creates the ECS instances, SLB, and NAT Gateway automatically, so they need no separate setup. In other words, you do not need to create steps 1, 2, and 3 of the deployment diagram separately.

3.2 Steps One, Two, Three - ACK Service Creation

3.2.1 Cluster Configuration

In the Container Service for Kubernetes (ACK) console, create a Kubernetes cluster and select the Standard Managed Cluster edition. Pay attention to the following cluster settings:

- The cluster must be in the same region as the resources created earlier, such as RDS, InfluxDB, and OpenSearch.

- Check the "Configure SNAT" option. ACK then automatically creates and configures a NAT gateway so that the cluster can access the internet.

- Check the "Public Access" option, which exposes the cluster API to the public network. If the cluster is maintained only from the internal network, you can leave this option unchecked.

- When creating the ACK cluster, choose flexvolume as the storage driver for now; this document does not support CSI drivers.

3.2.2 Worker Configuration

This step mainly sets the ECS instance type and the number of worker nodes. Choose the instance type from the configuration list or size it to your actual workload, but it must not fall below the minimum configuration requirements. Create at least 3 worker nodes.
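After the cluster is created, you can confirm from the operations machine that at least three worker nodes have joined and are Ready. The following is a minimal POSIX shell sketch and is not part of the Launcher tooling. The function name ready_nodes_at_least is hypothetical. It assumes the default column layout of "kubectl get nodes" output, where STATUS is the second column.

```shell
# Hypothetical helper: succeed if the "kubectl get nodes" output on stdin
# lists at least MIN nodes whose STATUS column is exactly "Ready".
# Nodes shown as "NotReady" or "Ready,SchedulingDisabled" are not counted.
ready_nodes_at_least() {
  awk -v min="$1" '
    NR == 1 { next }              # skip the header row
    $2 == "Ready" { ready++ }     # count healthy, schedulable nodes
    END { if (ready + 0 >= min + 0) exit 0; exit 1 }
  '
}
```

Usage on the operations machine: kubectl get nodes | ready_nodes_at_least 3 && echo "worker count OK"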

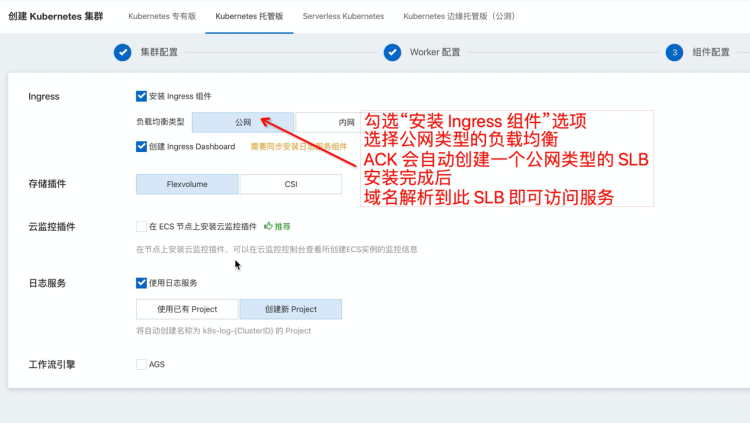

3.2.3 Component Configuration

In the component configuration, you must check the "Install Ingress Component" option and select the "Public Network" type. ACK then automatically creates a public SLB. After installation, resolve the domain name to the public IP of this SLB.
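To verify the DNS mapping, compare what your domain resolves to with the public IP of the ingress SLB. This sketch makes two assumptions. First, it assumes the ACK Ingress controller Service is kube-system/nginx-ingress-lb; list Services with "kubectl get svc -n kube-system" to confirm the name in your cluster. Second, it assumes dig is installed. The helper resolves_to and the host name launcher.dataflux.cn are illustrative only.

```shell
# Hypothetical helper: succeed if any line on stdin (for example the output of
# "dig +short <domain>", which may include CNAME lines) is exactly the expected IP.
resolves_to() {
  grep -qxF "$1"
}

# Example usage on the operations machine (the Service name is an assumption):
#   slb_ip=$(kubectl -n kube-system get svc nginx-ingress-lb \
#     -o jsonpath='{.status.loadBalancer.ingress[0].ip}')
#   dig +short launcher.dataflux.cn | resolves_to "$slb_ip" && echo "DNS OK"
```

Matching whole lines (grep -x) avoids treating 203.0.113.101 as a match for 203.0.113.10.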

3.3 Steps Four, Five - Dynamic Storage Configuration

Create the NAS file system in advance and obtain its Server URL (nas_server_url).

3.3.1 Dynamic Storage Installation

The container storage functionality of Alibaba Cloud Container Service for Kubernetes (ACK) is based on the Kubernetes Container Storage Interface (CSI). It is deeply integrated with Alibaba Cloud storage services such as EBS cloud disks, NAS and CPFS file storage, OSS object storage, and local disks. It is also fully compatible with native Kubernetes volume types such as EmptyDir, HostPath, Secret, and ConfigMap. For clusters that use CSI, the console installs the CSI-Plugin and CSI-Provisioner components by default.

- Verify Plugin

- Run the following command to check that the CSI-Plugin component has been deployed successfully:

$ kubectl get pod -n kube-system | grep csi-plugin

- Run the following command to check that the CSI-Provisioner component has been deployed successfully:

$ kubectl get pod -n kube-system | grep csi-provisioner
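Matching pod names with grep shows whether the pods exist, but not whether they are healthy. The sketch below is a stricter, hypothetical variant. component_ready reads "kubectl get pod -n kube-system" output and succeeds only if two conditions hold. At least one pod name must start with the given prefix, and every such pod must be Running with all containers ready. It assumes the default column order NAME, READY, STATUS.

```shell
# Hypothetical helper: read "kubectl get pod -n kube-system" output on stdin and
# succeed only if at least one pod whose name starts with PREFIX exists and every
# such pod is Running with all containers ready (READY column "n/n").
component_ready() {
  awk -v prefix="$1" '
    NR == 1 { next }                          # skip the header row
    index($1, prefix) == 1 {
      found = 1
      split($2, r, "/")                       # READY is "ready/total"
      if ($3 != "Running" || r[1] != r[2]) bad = 1
    }
    END { if (found && !bad) exit 0; exit 1 }
  '
}
```

Usage: kubectl get pod -n kube-system | component_ready csi-plugin && echo "csi-plugin OK" (repeat with csi-provisioner).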

- Create StorageClass

Create the alicloud-nas-subpath.yaml file and copy the following content into it.

alicloud-nas-subpath.yaml

Replace {{ nas_server_url }} with the Server URL of the NAS file system created earlier, then run the following command on the operations machine:

$ kubectl apply -f alicloud-nas-subpath.yaml
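The manifests in this section contain a {{ nas_server_url }} placeholder. A small render step helps you avoid applying a manifest where the placeholder was left in place, or was replaced with a value that already includes a protocol or path. This is a sketch under stated assumptions. render_nas_template is a hypothetical helper, and it expects the Server URL to be a bare host name, because the template in storage_class.yaml appends ":/k8s/" after the placeholder itself. The NAS address in the usage line is a placeholder.

```shell
# Hypothetical helper: print TEMPLATE with every "{{ nas_server_url }}"
# placeholder replaced by SERVER, refusing values that are not bare host names.
render_nas_template() {
  template=$1
  server=$2
  # Allow only host-name characters. This rejects "nfs://...", ":/path" suffixes,
  # and characters that are special in sed replacements ("|", "&", "\").
  case $server in
    ''|*[!A-Za-z0-9.-]*)
      echo "invalid NAS server address: '$server'" >&2
      return 1 ;;
  esac
  # In a basic regular expression "{" and "}" are literal characters.
  sed "s|{{ nas_server_url }}|$server|g" "$template"
}
```

Usage: render_nas_template alicloud-nas-subpath.yaml 0a1b2c3d4e-abc12.cn-hangzhou.nas.aliyuncs.com | kubectl apply -f -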

If the cluster runs an Alibaba Cloud Kubernetes version earlier than 1.16 and Flexvolume was selected as the storage plugin, the console installs the Flexvolume and Disk-Controller components by default. It does not install the alicloud-nas-controller component.

- Install the alicloud-nas-controller component

Download nas_controller.yaml, then run the following command on the operations machine:

$ kubectl apply -f nas_controller.yaml

- Verify Plugin

Run the following command to check that the Disk-Controller component has been deployed successfully:

$ kubectl get pod -n kube-system | grep alicloud-disk-controller
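Controller pods can take a while to reach Running after they are applied, so a one-off grep may give a false negative. The following hypothetical retry helper, wait_for, reruns a check command at a fixed interval. It stops when the command succeeds or when the maximum number of attempts is used up. The attempt count and interval in the usage line are arbitrary example values.

```shell
# Hypothetical helper: rerun a command every INTERVAL seconds until it succeeds
# or ATTEMPTS tries have failed.
#   usage: wait_for ATTEMPTS INTERVAL command [args...]
wait_for() {
  attempts=$1
  interval=$2
  shift 2
  n=1
  while ! "$@"; do
    if [ "$n" -ge "$attempts" ]; then
      echo "gave up after $attempts attempts: $*" >&2
      return 1
    fi
    n=$((n + 1))
    sleep "$interval"
  done
}
```

Usage: wait_for 30 10 sh -c 'kubectl get pod -n kube-system | grep alicloud-disk-controller | grep -q Running'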

- Create StorageClass

Create the storage_class.yaml file and copy the following content into it.

storage_class.yaml

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: alicloud-nas
  annotations:
    storageclass.beta.kubernetes.io/is-default-class: "true"
    storageclass.kubernetes.io/is-default-class: "true"
mountOptions:
- nolock,tcp,noresvport
- vers=3
parameters:
  server: "{{ nas_server_url }}:/k8s/"
  driver: flexvolume
provisioner: alicloud/nas
reclaimPolicy: Delete

Replace {{ nas_server_url }} with the Server URL of the NAS file system created earlier, then run the following command on the operations machine:

$ kubectl apply -f storage_class.yaml
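After applying the StorageClass, you can confirm that alicloud-nas is the cluster's only default class. The annotations in storage_class.yaml mark alicloud-nas as the default. That only has a predictable effect if no other class is also marked default. The sketch relies on how kubectl marks the default class: "kubectl get storageclass" prints "(default)" as a separate field right after the class name. only_default_sc is a hypothetical name.

```shell
# Hypothetical helper: read "kubectl get storageclass" output on stdin and
# succeed only if NAME is marked "(default)" and no other class is.
only_default_sc() {
  awk -v want="$1" '
    NR == 1 { next }                                  # skip the header row
    $2 == "(default)" { defaults++; if ($1 == want) hit = 1 }
    END { if (hit && defaults == 1) exit 0; exit 1 }
  '
}
```

Usage: kubectl get storageclass | only_default_sc alicloud-nas && echo "default StorageClass OK"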

3.3.2 Verify Deployment

3.3.2.1 Create a PVC and Check Its Status

Run the following command to create a test PVC:

$ cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: cfs-pvc001
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
  storageClassName: alicloud-nas
EOF

3.3.2.2 Check the PVC

$ kubectl get pvc | grep cfs-pvc001

cfs-pvc001 Bound pvc-a17a0e50-04d2-4ee0-908d-bacd8d53aaa4 1Gi RWO alicloud-nas 3d7h

A status of Bound indicates that dynamic storage has been deployed successfully.
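For scripted checks, you can read the STATUS column directly instead of inspecting it by eye. A minimal sketch, assuming the default "kubectl get pvc" column order (NAME, then STATUS). pvc_status is a hypothetical helper. kubectl can also return the phase directly: kubectl get pvc cfs-pvc001 -o jsonpath='{.status.phase}'

```shell
# Hypothetical helper: print the STATUS column for claim NAME from
# "kubectl get pvc" output on stdin (prints nothing if the claim is not listed).
pvc_status() {
  awk -v name="$1" 'NR > 1 && $1 == name { print $2; exit }'
}
```

Usage: [ "$(kubectl get pvc | pvc_status cfs-pvc001)" = Bound ] && echo "dynamic storage OK"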

3.4 Step Six - Cache Services

- You can use the default built-in cache service.

- If you do not use the default built-in cache service, configure Redis as follows:

- Redis version: 6.0. Single-node, proxy-mode, and master-slave Redis clusters are supported.

- Set a Redis password.

- Add the intranet IPs of the ECS instances automatically created by ACK to the Redis whitelist.
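The Redis, OpenSearch, and RDS whitelists all need the intranet IPs of the worker nodes. This hypothetical helper, node_whitelist, collects them from "kubectl get nodes -o wide" output and locates the INTERNAL-IP column by its header. It prints the IPs comma-separated, which is assumed here to be the format the console's whitelist fields accept. Rerun it after adding worker nodes, since new nodes get new IPs.

```shell
# Hypothetical helper: turn "kubectl get nodes -o wide" output on stdin into a
# comma-separated list of node intranet IPs.
node_whitelist() {
  awk '
    # Find the INTERNAL-IP column from the header instead of hard-coding it.
    NR == 1 { for (i = 1; i <= NF; i++) if ($i == "INTERNAL-IP") col = i; next }
    col {
      if (ips != "") ips = ips ","
      ips = ips $col
    }
    END { print ips }
  '
}
```

Usage: kubectl get nodes -o wide | node_whitelist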

3.5 Step Seven - GuanceDB for Metrics

3.6 Step Eight - OpenSearch

- Create an administrator account.

- Install the Chinese word segmentation plugin.

- Add the intranet IPs of the ECS instances automatically created by ACK to the OpenSearch whitelist.
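To confirm that the Chinese word segmentation plugin is installed, you can query the _cat/plugins API. It lists one "node component version" line per plugin per node. The sketch assumes the plugin is the IK analyzer, registered as analysis-ik, and that the service is reachable over HTTP with the administrator account. The host, port, password, and the has_plugin helper are placeholders, not values from this manual.

```shell
# Hypothetical helper: read "GET _cat/plugins" output on stdin (one
# "node component version" line per installed plugin per node) and succeed
# if NAME appears in the component column.
has_plugin() {
  awk -v name="$1" '$2 == name { found = 1 } END { if (found) exit 0; exit 1 }'
}
```

Usage: curl -s -u admin:PASSWORD "http://OPENSEARCH_HOST:9200/_cat/plugins" | has_plugin analysis-ik && echo "IK plugin found"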

3.7 Step Nine - RDS

- Create an administrator account. It must have administrator privileges, because the installation uses it during initialization to create and initialize the database of each application.

- In the console's parameter settings, set innodb_large_prefix to ON. This is not required for MySQL 8.0 and later, which removed this parameter.

- Add the intranet IPs of the ECS instances automatically created by ACK to the RDS whitelist.
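You can check the innodb_large_prefix requirement from the operations machine with the mysql client before installation. large_prefix_ok is a hypothetical helper. It takes the server version and reads the batch-mode output of SHOW VARIABLES LIKE 'innodb_large_prefix'. For MySQL 8.0 and later it succeeds without checking, because the variable no longer exists there. The host and account names in the usage lines are placeholders.

```shell
# Hypothetical helper: VERSION is the server version string (e.g. from
# "SELECT VERSION()"); stdin is the batch-mode output ("name<TAB>value") of
#   SHOW VARIABLES LIKE 'innodb_large_prefix'
large_prefix_ok() {
  major=${1%%.*}
  if [ "$major" -ge 8 ] 2>/dev/null; then
    cat >/dev/null          # drain stdin; the variable was removed in MySQL 8.0
    return 0
  fi
  awk '$1 == "innodb_large_prefix" && $2 == "ON" { ok = 1 }
       END { if (ok) exit 0; exit 1 }'
}
```

Usage:
ver=$(mysql -h RDS_HOST -u ADMIN_USER -p -N -B -e "SELECT VERSION()")
mysql -h RDS_HOST -u ADMIN_USER -p -N -B -e "SHOW VARIABLES LIKE 'innodb_large_prefix'" | large_prefix_ok "$ver" && echo "innodb_large_prefix OK"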

4 kubectl Installation and Configuration

4.1 Install kubectl

kubectl is the Kubernetes command-line client. With it, you can deploy applications and inspect and manage cluster resources. The Launcher deploys applications through this command-line tool. For installation instructions, refer to the official documentation:

https://kubernetes.io/docs/tasks/tools/install-kubectl/
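Before connecting to the cluster, you can check that kubectl is actually on the operations machine's PATH. A minimal sketch with a hypothetical helper, require_cmds, which reports every missing command at once. "kubectl version --client" then prints the client version without contacting a cluster.

```shell
# Hypothetical helper: fail, listing every missing name, if any of the given
# commands is not found on PATH.
require_cmds() {
  missing=""
  for c in "$@"; do
    command -v "$c" >/dev/null 2>&1 || missing="$missing $c"
  done
  if [ -n "$missing" ]; then
    echo "missing commands:$missing" >&2
    return 1
  fi
  return 0
}
```

Usage: require_cmds kubectl && kubectl version --client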

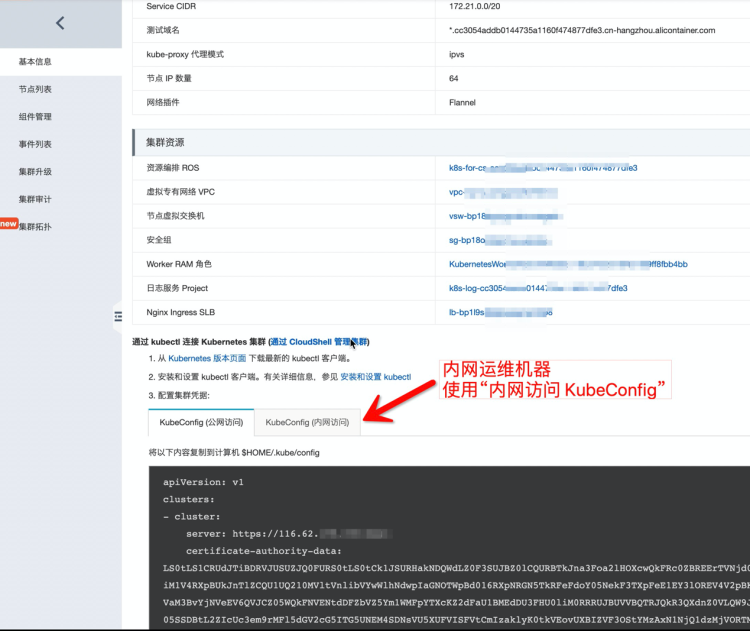

4.2 Configure kubeconfig

To manage the cluster, kubectl needs the cluster's kubeconfig content in the $HOME/.kube/config file. You can view the kubeconfig content in the cluster's Basic Information.

Use the private network kubeconfig if your operations machine is connected to the cluster's internal network; otherwise, use the public network kubeconfig.
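You can script the kubeconfig placement described above as follows. install_kubeconfig is a hypothetical helper. It assumes you have saved the kubeconfig content from the console to a local file; ack-kubeconfig.yaml in the usage line is a made-up name. The helper backs up any existing config and restricts the file to mode 600, because the kubeconfig contains cluster credentials.

```shell
# Hypothetical helper: install SRC as DEST (default $HOME/.kube/config),
# backing up an existing DEST to DEST.bak and restricting permissions to 600.
install_kubeconfig() {
  src=$1
  dest=${2:-$HOME/.kube/config}
  [ -s "$src" ] || { echo "kubeconfig not found or empty: $src" >&2; return 1; }
  mkdir -p "$(dirname "$dest")" || return 1
  if [ -e "$dest" ]; then
    cp "$dest" "$dest.bak" || return 1
  fi
  cp "$src" "$dest" && chmod 600 "$dest"
}
```

Usage: install_kubeconfig ./ack-kubeconfig.yaml && kubectl get nodes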

5 Start Installation

After completing the steps above, continue with the Start Installation manual.