Log Details¶

Click a log in the log list to open its details page. The page shows detailed information about the log, including the time it was generated, host, source, service, content, extended fields, and context logs.

View Complete Logs¶

When logs are reported to Guance, any single log larger than 1M is split into 1M parts. For example, a single 2.5M log is divided into 3 parts (1M/1M/0.5M). Use the following fields to check the integrity of split logs:

| Field | Type | Description |
|---|---|---|
| __truncated_id | string | Unique identifier of the log. All parts split from the same log share the same __truncated_id, with an ID of the form LT_xxx. |
| __truncated_count | number | Total number of parts the log was split into. |
| __truncated_number | number | Position of this part in the split order, starting from 0, where 0 is the first part in the sequence. |

On the log details page, if the current log has been split into multiple parts, a View Complete Log button appears in the upper right corner. Clicking this button opens a new page that lists all related parts in split order and highlights the selected log, so you can locate the parts before and after it.
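The integrity check described above can be sketched in a few lines. This is a minimal illustration, not Guance's implementation: it assumes the logs have already been exported as Python dictionaries, that the text of each part is carried in a `message` field, and it uses a made-up ID `LT_demo`. The function names are hypothetical.

```python
from collections import defaultdict


def check_split_integrity(logs):
    """Return {__truncated_id: True/False}; True means every part is present."""
    groups = defaultdict(list)
    for log in logs:
        groups[log["__truncated_id"]].append(log)

    report = {}
    for truncated_id, parts in groups.items():
        expected = parts[0]["__truncated_count"]
        seen = sorted(part["__truncated_number"] for part in parts)
        # Parts are numbered from 0, so a complete log has 0 .. expected - 1.
        report[truncated_id] = seen == list(range(expected))
    return report


def reassemble(parts):
    """Join the parts of one split log in split order."""
    ordered = sorted(parts, key=lambda part: part["__truncated_number"])
    # Assumption: the log text of each part is carried in the "message" field.
    return "".join(part["message"] for part in ordered)


# Hypothetical sample: one log split into 3 parts, received out of order.
parts = [
    {"__truncated_id": "LT_demo", "__truncated_count": 3, "__truncated_number": 2, "message": "C"},
    {"__truncated_id": "LT_demo", "__truncated_count": 3, "__truncated_number": 0, "message": "A"},
    {"__truncated_id": "LT_demo", "__truncated_count": 3, "__truncated_number": 1, "message": "B"},
]

print(check_split_integrity(parts))
print(reassemble(parts))
```

A group is reported as incomplete as soon as any number between 0 and `__truncated_count - 1` is missing, which mirrors how the three fields are meant to be read together.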

Obsy AI Error Analysis¶

Guance provides the ability to automatically parse error logs. It uses large models to automatically extract key information from logs and combines online search engines and operations knowledge bases to quickly analyze possible causes of failures and provide preliminary solutions.

- Filter all logs with a status of error;

- Click on a single data entry to expand the details page;

- Click Obsy AI Error Analysis in the upper right corner;

- Start anomaly analysis.

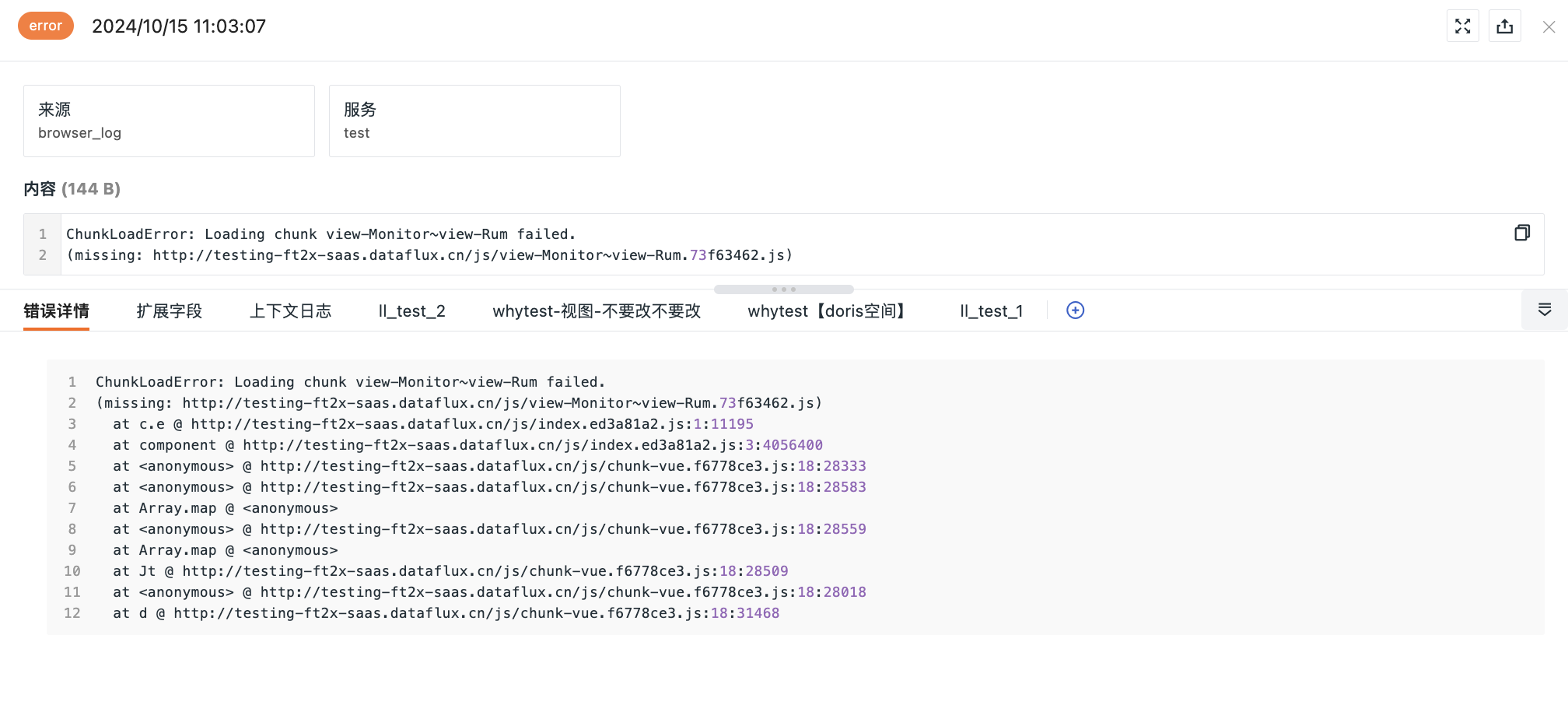

Error Details¶

If the current log contains the error_stack or error_message field, the details page shows the error details related to this log.

To view more log error information, go to Log Error Tracing.

Attribute Fields¶

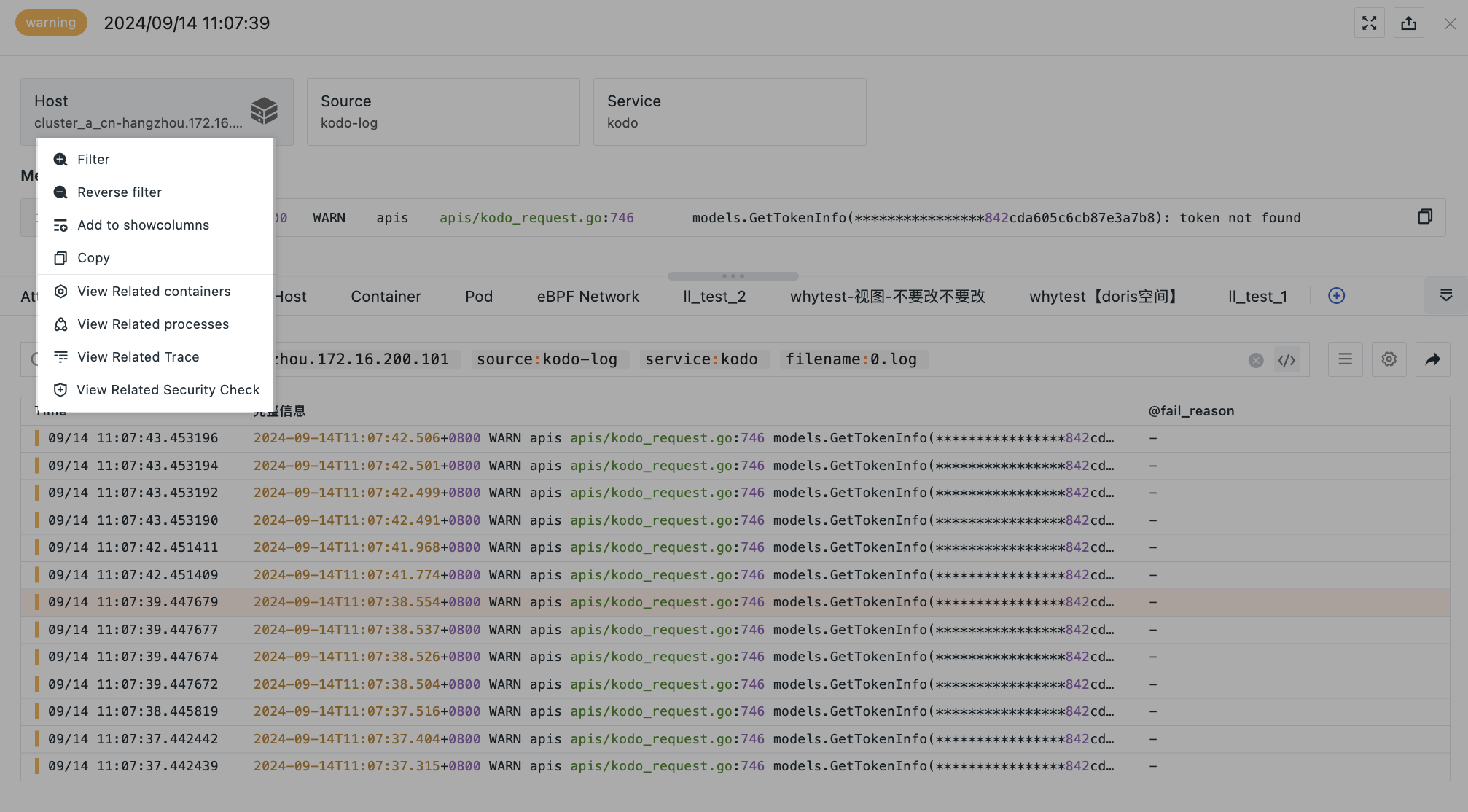

Click attribute fields for quick filtering and viewing, allowing you to see hosts, processes, traces, and container data related to the logs.

| Field | Description |
|---|---|
| Filter Field Value | Add this field to the log explorer to view all log data that matches this field value. |
| Inverse Filter Field Value | Add this field to the log explorer to view all log data except the data that matches this field value. |
| Add to Display Column | Add this field to the explorer list for viewing. |
| Copy | Copy this field to the clipboard. |
| View Related Containers | View all containers related to this host. |
| View Related Processes | View all processes related to this host. |
| View Related Traces | View all traces related to this host. |
| View Related Inspections | View all inspection data related to this host. |

Log Content¶

-

Log content will automatically switch between JSON and text viewing modes based on the

messagetype. If themessagefield does not exist in the log, the log content section will not be displayed. Log content supports expanding and collapsing; by default, it is expanded, and after collapsing, only one line height is shown. -

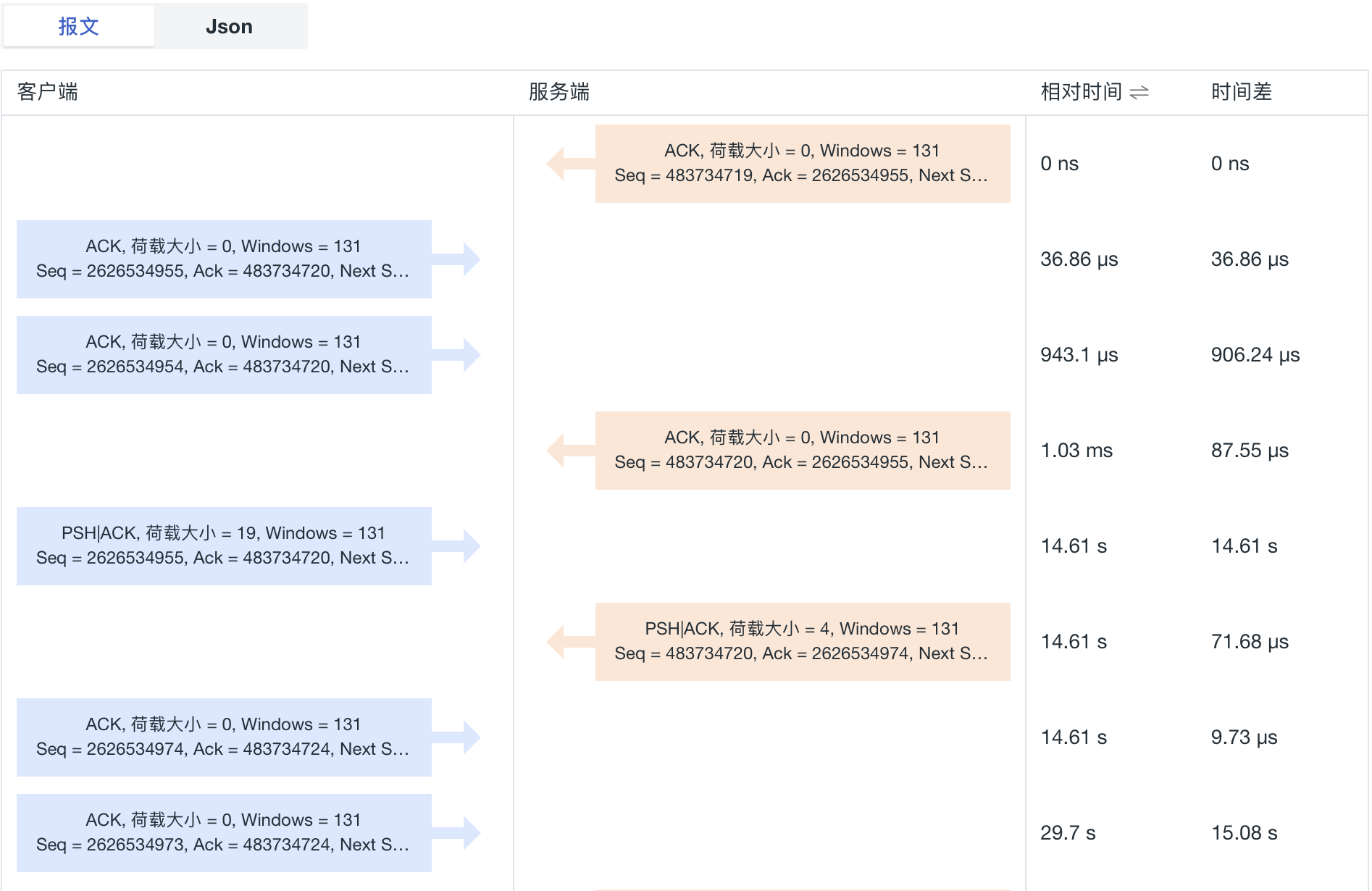

For logs with

source:bpf_net_l4_log, both JSON and packet viewing modes are provided. The packet mode displays client, server, time information, and supports switching between absolute and relative time display. By default, it shows absolute time. The configuration after switching will be saved in the local browser.

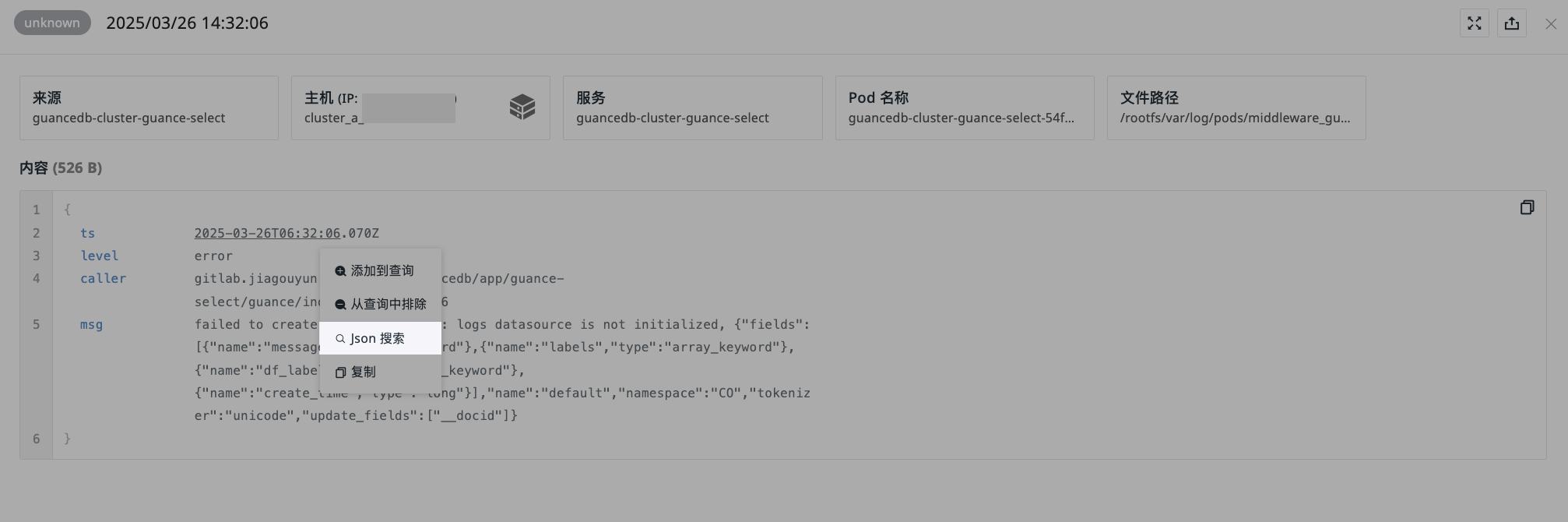

JSON Search¶

In JSON-formatted logs, both keys and values can be searched via JSON search. After you click, a search term in the format @key:value is added to the explorer's search bar.

For multi-level JSON data, use . to indicate hierarchical relationships. For example, @key1.key2:value indicates searching for the value corresponding to key2 under key1.

For more details, refer to JSON Search.
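As a rough illustration of the @key:value format and the dot notation for nested keys, the following sketch turns a parsed JSON log into search terms. The function name `json_search_terms` is hypothetical, and the sketch deliberately ignores arrays and any quoting or escaping rules for values, since those are not described here.

```python
def json_search_terms(data, prefix=""):
    """Return '@key:value' terms, joining nested keys with '.'."""
    terms = []
    for key, value in data.items():
        path = f"{prefix}.{key}" if prefix else key
        if isinstance(value, dict):
            # Descend one level: key1 -> key1.key2
            terms.extend(json_search_terms(value, path))
        else:
            terms.append(f"@{path}:{value}")
    return terms


# Hypothetical log content with one nested level.
log_content = {"key1": {"key2": "value"}, "status": "error"}
print(json_search_terms(log_content))
```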

Extended Fields¶

- Enter a field name or value in the search bar to quickly search for and locate it;

- After you select the field alias option, aliases are displayed after the field names;

- Hover over an extended field, click the dropdown icon, and choose one of the following actions:

  - Filter field values

  - Inverse filter field values

  - Add to display column

  - Perform dimensional analysis: clicking this jumps to analysis mode > time series chart.

  - Copy

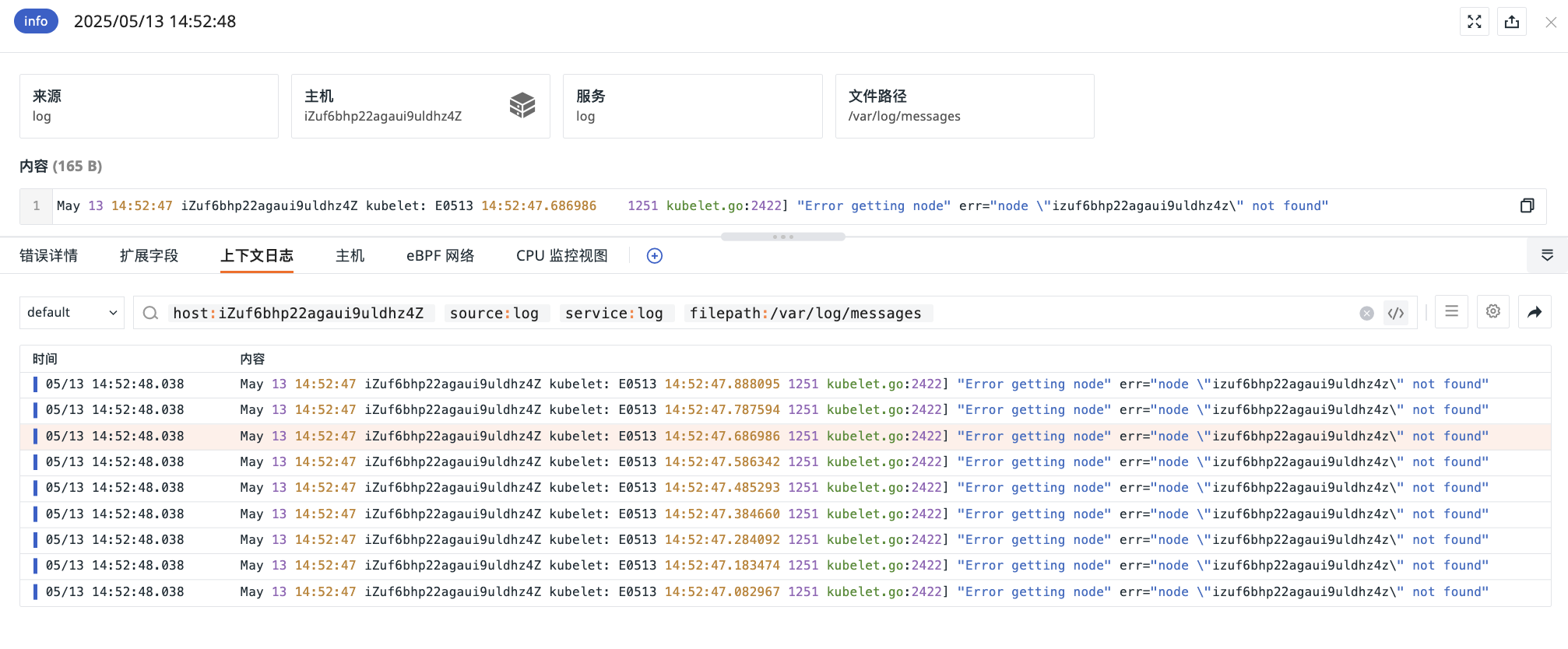

View Context Logs¶

The context query function of the log service lets you trace, along the timeline, the records before and after an abnormal log, so you can quickly locate the root cause of a problem.

- On the log details page, you can directly view the context logs of this data content;

- You can sort data by clicking the "Time" column;

- The left dropdown box allows you to select indexes to filter out corresponding data;

- Open a new page to view context logs.

Related Logic Explanation

Each time you scroll, 50 more entries are loaded from the returned data.

How is the returned data queried?

Prerequisite: does the log have the log_read_lines field? If it does, logic a applies; if not, logic b applies.

a. Get the log_read_lines value of the current log and apply the filter log_read_lines >= {{log_read_lines.value - 30}} and log_read_lines <= {{log_read_lines.value + 30}}

DQL Example: Current log line number = 1354170

Then:

L::RE(`.*`):(`message`) { `index` = 'default' and `host` = "ip-172-31-204-89.cn-northwest-1" AND `source` = "kodo-log" AND `service` = "kodo-inner" AND `filename` = "0.log" and `log_read_lines` >= 1354140 and `log_read_lines` <= 1354200} sorder by log_read_lines
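The line-number window in logic a is simple arithmetic. A minimal sketch, assuming a hypothetical helper `line_window_filter` that only builds the log_read_lines part of the filter:

```python
def line_window_filter(log_read_lines, span=30):
    """Build the log_read_lines range filter around the current log line."""
    lower = log_read_lines - span
    upper = log_read_lines + span
    return f"`log_read_lines` >= {lower} and `log_read_lines` <= {upper}"


# Using the line number from the DQL example above.
print(line_window_filter(1354170))
```

For line 1354170 this yields the same bounds as the DQL example, 1354140 and 1354200.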

b. Get the time of the current log, and calculate the start time and end time by moving forward/backward.

- Start time: Move back 5 minutes from the current log time;

- End time: Take the time of the 50th data point after moving forward 50 entries from the current log time. If time = current log time, then use time + 1 microsecond as the end time; if time ≠ current log time, then use time as the end time.
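The start and end time rules of logic b can be sketched as follows. This is an interpretation, not the product's code: it assumes "move back 5 minutes" means 5 minutes earlier than the current log, and that the time of the 50th entry has already been fetched. The function name `context_time_window` is hypothetical.

```python
from datetime import datetime, timedelta


def context_time_window(current_time, fiftieth_time):
    """Return (start, end) for the context query of logic b."""
    # Assumption: "move back 5 minutes" means 5 minutes earlier.
    start = current_time - timedelta(minutes=5)
    if fiftieth_time == current_time:
        # Same timestamp as the current log: extend the end by 1 microsecond.
        end = fiftieth_time + timedelta(microseconds=1)
    else:
        end = fiftieth_time
    return start, end


current = datetime(2024, 1, 1, 12, 0, 0)
print(context_time_window(current, current))
print(context_time_window(current, datetime(2024, 1, 1, 12, 0, 7)))
```

Adding 1 microsecond when both timestamps are equal keeps the end time strictly after the current log time, so the window is never empty.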

Context Log Details Page¶

Click to jump to the details page. You can manage all current data through the following operations:

- Input text in the search box to search and locate data;

- Click the side button to switch from the system's default automatic line wrapping to content overflow mode. In this mode, each log is displayed on one line, and you can scroll left and right as needed to view it.

Correlation Analysis¶

The system supports correlation analysis of log data. Besides error details, extended fields, and context logs, you can get a complete picture of the hosts, containers, networks, and other objects associated with the logs.

Built-in Pages¶

For built-in pages such as hosts, containers, and Pods, you can perform the following operations (using the "HOST" built-in page as an example):

- Edit the display fields of the current page; the system automatically matches the corresponding data based on the fields;

- Jump to the metric view or the HOST details page;

- Filter by time range.

Note

Only workspace managers can modify the display fields of built-in pages, and it is recommended to configure common fields. If this page is shared by multiple explorers, field modifications will take effect in real-time.

For example, if you configure the "index" field here, its value is displayed normally for logs that contain the field, but in the APM explorer, where this field is missing, no value is displayed.

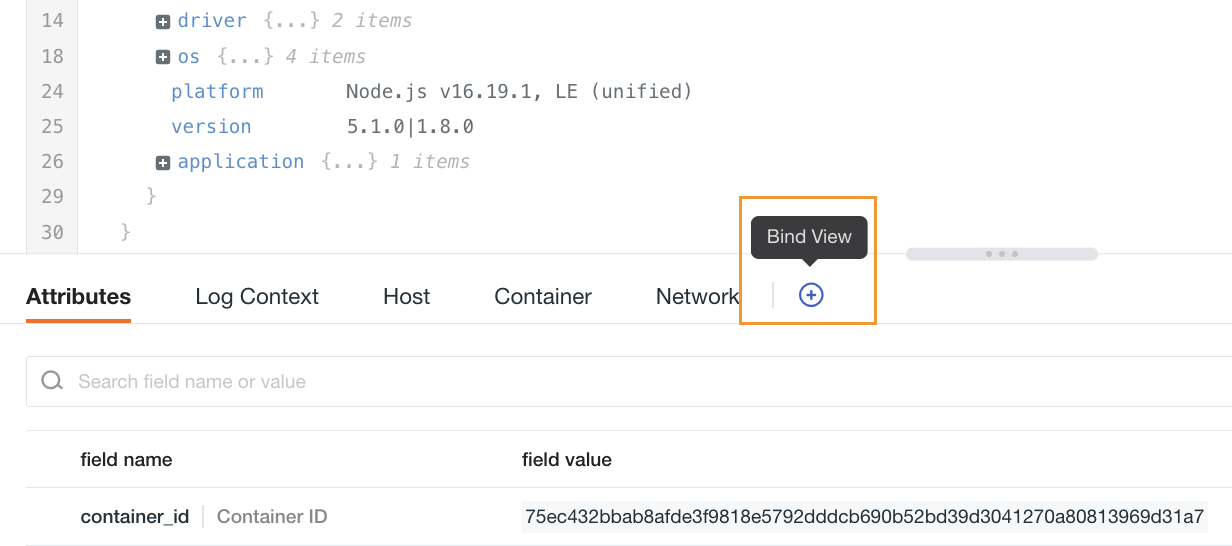

Built-in Views¶

In addition to the default views displayed by the system here, you can also bind user views.

- Enter the built-in view binding page;

- View the default associated fields. You can choose to retain or delete fields, and you can also add new key:value fields;

- Select a view;

- After completing the binding, you can view the bound built-in view in the HOST object details. You can click the jump button to go to the corresponding built-in view page.