External Data Sources¶

With DataFlux Func, multiple data sources such as MySQL can be quickly integrated into Guance to achieve seamless data querying and visualization integration.

Features¶

- Native Query: Use native query statements of the data source directly in charts without any additional conversion;

- Data Protection: Considering data security and privacy, all data source information is saved only in your local Func and not stored on the platform to ensure information security and prevent data leakage;

- Custom Management: Easily add and manage various external data sources according to actual needs;

- Real-time Data: Connect directly to external data sources to obtain data in real time, enabling immediate response and decision-making.

Two Integration Paths¶

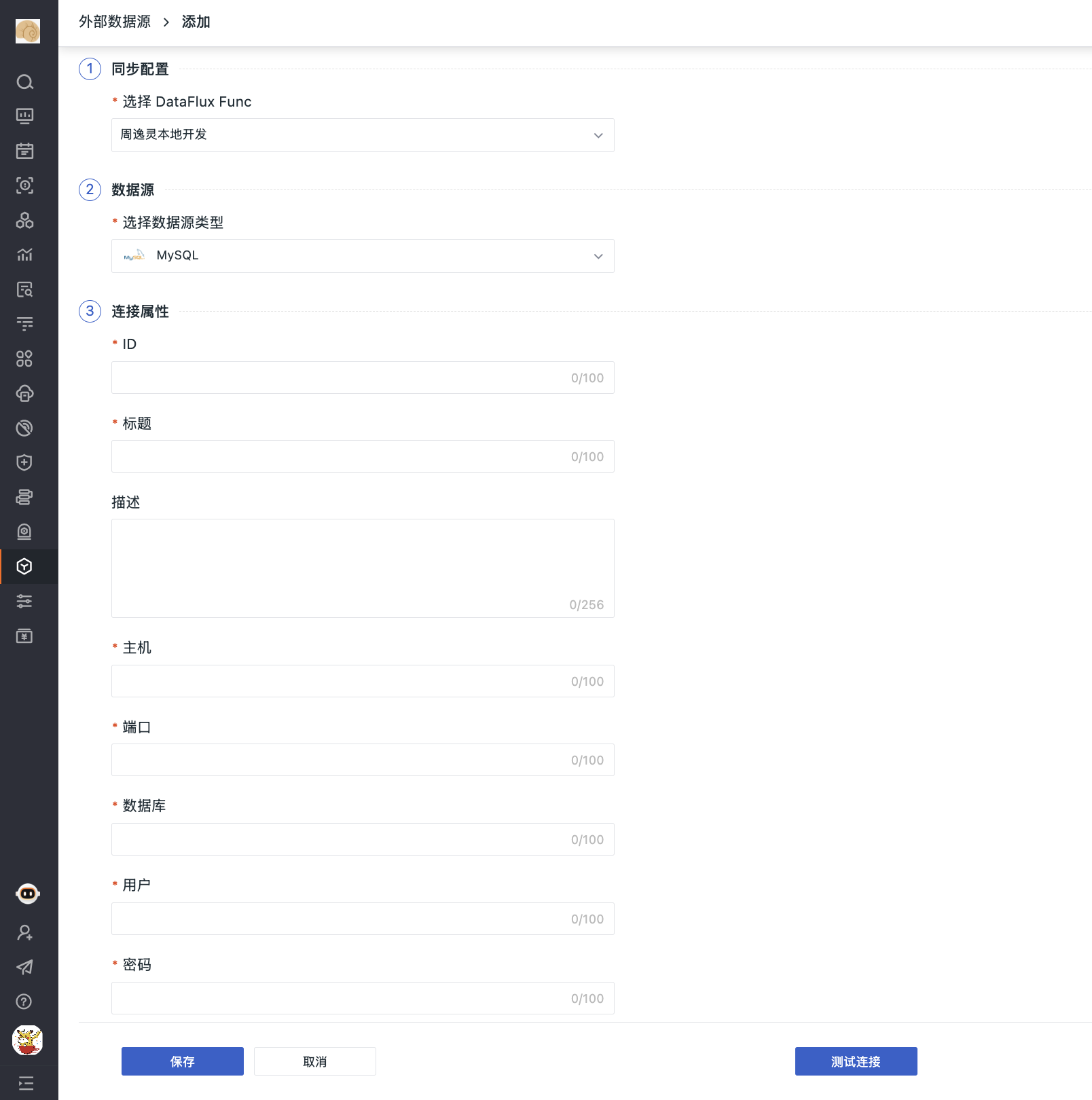

Add Data Source from theGuance Side¶

This means adding or viewing connected DataFlux Func directly under Extensions, further managing all externally connected data sources.

Note

This method is more user-friendly compared to the second path, so we recommend using it.

- Select DataFlux Func from the dropdown;

- Choose a supported data source type;

- Define connection properties, including ID, data source title, associated host, port, database, user, password.

- Test the connection if needed;

- Save.

Use Func to Query External Data Sources¶

Note

The term "external data sources" here has a broad definition, which includes common external storage systems (such as databases like MySQL, Redis, etc.) and third-party systems (e.g.,Guance console).

Prerequisites

You need to download the corresponding installation package and deploy the Func platform by following the Quick Start.

After deployment, wait for initialization to complete, then log in to the platform.

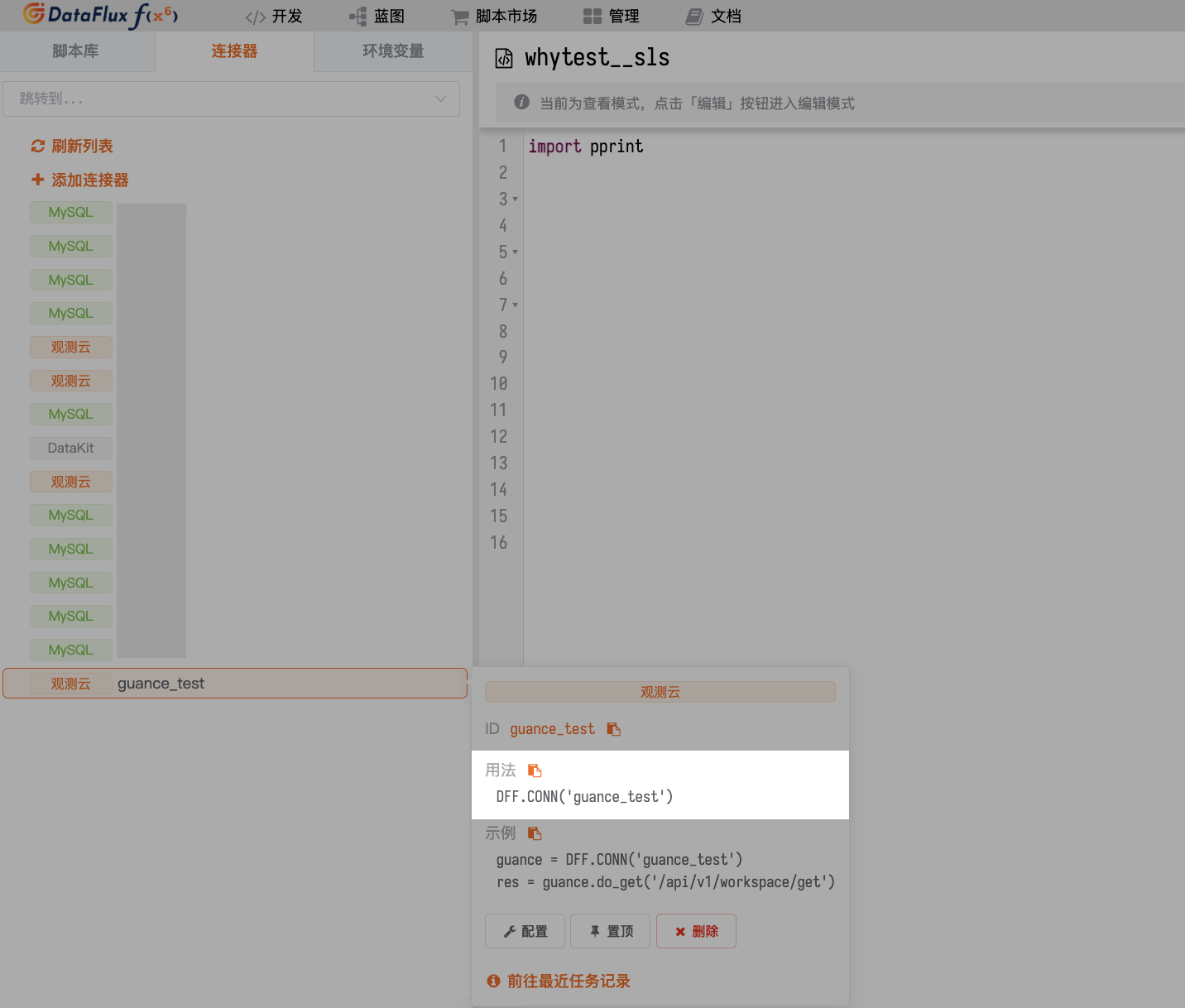

Connecting Func withGuance¶

Connectors help developers connect toGuance systems.

Go to Development > Connectors > Add Connector page:

- Select connector type;

- Customize the connector's ID, e.g.,

guance_test; - Add a title. This title will be displayed synchronously within theGuance workspace;

- Enter a description for the connector if needed;

- SelectGuancenode;

- Add API Key ID and API Key;

- Test connectivity if needed;

- Save.

After association, you can query data sources via the following two methods on the Func platform:

How to Get an API Key¶

- Go toGuance Workspace > Management > API Key Management;

- Click Create Key on the right side of the page;

- Enter a name;

- Click OK. The system will automatically create an API Key for you; you can view it in API Key.

For more details, refer to API Key Management.

Using Connectors¶

After normally adding a connector, you can use the connector ID in scripts to get the operation object of the corresponding connector.

Take the connector with ID guance_test mentioned above as an example, the code to get its operation object would be:

Writing Scripts Manually¶

Besides using connectors, you can also write your own functions to perform data queries.

Assume that a user has correctly created a MySQL connector (with ID defined as mysql) and there exists a table named my_table containing the following data:

id |

userId |

username |

reqMethod |

reqRoute |

reqCost |

createTime |

|---|---|---|---|---|---|---|

| 1 | u-001 | admin | POST | /api/v1/scripts/:id/do/modify | 23 | 1730840906 |

| 2 | u-002 | admin | POST | /api/v1/scripts/:id/do/publish | 99 | 1730840906 |

| 3 | u-003 | zhang3 | POST | /api/v1/scripts/:id/do/publish | 3941 | 1730863223 |

| 4 | u-004 | zhang3 | POST | /api/v1/scripts/:id/do/publish | 159 | 1730863244 |

| 5 | u-005 | li4 | POST | /api/v1/scripts/:id/do/publish | 44 | 1730863335 |

| ... |

Assuming that at this point you need to query this table's data through a data query function, and the field extraction rules are as follows:

| Original Field | Extracted As |

|---|---|

createTime |

Time time |

reqCost |

Column req_cost |

reqMethod |

Column req_method |

reqRoute |

Column req_route |

userId |

Tag user_id |

username |

Tag username |

Then, the full reference code is as follows:

- Example of a data query function

import json

@DFF.API('Query data from my_table', category='dataPlatform.dataQueryFunc')

def query_from_my_table(time_range):

# Get the operation object of the connector

mysql = DFF.CONN('mysql')

# MySQL query statement

sql = '''

SELECT

createTime, userId, username, reqMethod, reqRoute, reqCost

FROM

my_table

WHERE

createTime > ?

AND createTime < ?

LIMIT 5

'''

# Since the unit of time_range passed in is milliseconds

# but the unit of createTime field in MySQL is seconds, conversion is required

sql_params = [

int(time_range[0] / 1000),

int(time_range[1] / 1000),

]

# Execute query

db_res = mysql.query(sql, sql_params)

# Convert to DQL-like return result

# Depending on different tags, multiple data series might need to be generated

# Use serialized tags as keys to create a mapping table

series_map = {}

# Traverse original data, convert structure and store in mapping table

for d in db_res:

# Collect tags

tags = {

'user_id' : d.get('userId'),

'username': d.get('username'),

}

# Serialize tags (tag keys need to be sorted to ensure consistent output)

tags_dump = json.dumps(tags, sort_keys=True, ensure_ascii=True)

# If the data series for this tag hasn't been established yet, create one

if tags_dump not in series_map:

# Basic structure of data series

series_map[tags_dump] = {

'columns': ['time', 'req_cost', 'req_method', 'req_route'], # Columns (first column fixed as time)

'tags' : tags, # Tags

'values' : [], # Value list

}

# Extract time and columns, then append value

series = series_map[tags_dump]

value = [

d.get('createTime') * 1000, # Time (output unit needs to be in milliseconds, adjust accordingly here)

d.get('reqCost'), # Column req_cost

d.get('reqMethod'), # Column req_method

d.get('reqRoute'), # Column req_route

]

series['values'].append(value)

# Add outer DQL structure

dql_like_res = {

# Data series

'series': [ list(series_map.values()) ] # Note that an extra array layer is needed here

}

return dql_like_res

If you only want to understand the data transformation process and don't care about the querying process (or temporarily don't have an actual database to query), you can refer to the following code:

- Example of a data query function (without MySQL query part)

import json

@DFF.API('Query data from somewhere', category='dataPlatform.dataQueryFunc')

def query_from_somewhere(time_range):

# Assume raw data has already been obtained through some means

db_res = [

{'createTime': 1730840906, 'reqCost': 23, 'reqMethod': 'POST', 'reqRoute': '/api/v1/scripts/:id/do/modify', 'username': 'admin', 'userId': 'u-001'},

{'createTime': 1730840906, 'reqCost': 99, 'reqMethod': 'POST', 'reqRoute': '/api/v1/scripts/:id/do/publish', 'username': 'admin', 'userId': 'u-001'},

{'createTime': 1730863223, 'reqCost': 3941, 'reqMethod': 'POST', 'reqRoute': '/api/v1/scripts/:id/do/publish', 'username': 'zhang3', 'userId': 'u-002'},

{'createTime': 1730863244, 'reqCost': 159, 'reqMethod': 'POST', 'reqRoute': '/api/v1/scripts/:id/do/publish', 'username': 'zhang3', 'userId': 'u-002'},

{'createTime': 1730863335, 'reqCost': 44, 'reqMethod': 'POST', 'reqRoute': '/api/v1/scripts/:id/do/publish', 'username': 'li4', 'userId': 'u-003'}

]

# Convert to DQL-like return result

# Depending on different tags, multiple data series might need to be generated

# Use serialized tags as keys to create a mapping table

series_map = {}

# Traverse original data, convert structure and store in mapping table

for d in db_res:

# Collect tags

tags = {

'user_id' : d.get('userId'),

'username': d.get('username'),

}

# Serialize tags (tag keys need to be sorted to ensure consistent output)

tags_dump = json.dumps(tags, sort_keys=True, ensure_ascii=True)

# If the data series for this tag hasn't been established yet, create one

if tags_dump not in series_map:

# Basic structure of data series

series_map[tags_dump] = {

'columns': ['time', 'req_cost', 'req_method', 'req_route'], # Columns (first column fixed as time)

'tags' : tags, # Tags

'values' : [], # Value list

}

# Extract time and columns, then append value

series = series_map[tags_dump]

value = [

d.get('createTime') * 1000, # Time (output unit needs to be in milliseconds, adjust accordingly here)

d.get('reqCost'), # Column req_cost

d.get('reqMethod'), # Column req_method

d.get('reqRoute'), # Column req_route

]

series['values'].append(value)

# Add outer DQL structure

dql_like_res = {

# Data series

'series': [ list(series_map.values()) ] # Note that an extra array layer is needed here

}

return dql_like_res

- Example Return Result

{

"series": [

[

{

"columns": ["time", "req_cost", "req_method", "req_route"],

"tags": {"user_id": "u-001", "username": "admin"},

"values": [

[1730840906000, 23, "POST", "/api/v1/scripts/:id/do/modify" ],

[1730840906000, 99, "POST", "/api/v1/scripts/:id/do/publish"]

]

},

{

"columns": ["time", "req_cost", "req_method", "req_route"],

"tags": {"user_id": "u-002", "username": "zhang3"},

"values": [

[1730863223000, 3941, "POST", "/api/v1/scripts/:id/do/publish"],

[1730863244000, 159, "POST", "/api/v1/scripts/:id/do/publish"]

]

},

{

"columns": ["time", "req_cost", "req_method", "req_route"],

"tags": {"user_id": "u-003", "username": "li4"},

"values": [

[1730863335000, 44, "POST", "/api/v1/scripts/:id/do/publish"]

]

}

]

]

}

Management List¶

All connected data sources can be found under Integrations > External Data Sources > Connected Data Sources.

In the list, you can perform the following actions:

- View data source type, ID, status, creation and update information;

- Edit a data source, modifying configurations except for DataFlux Func, data source type, and ID;

- Delete a data source.

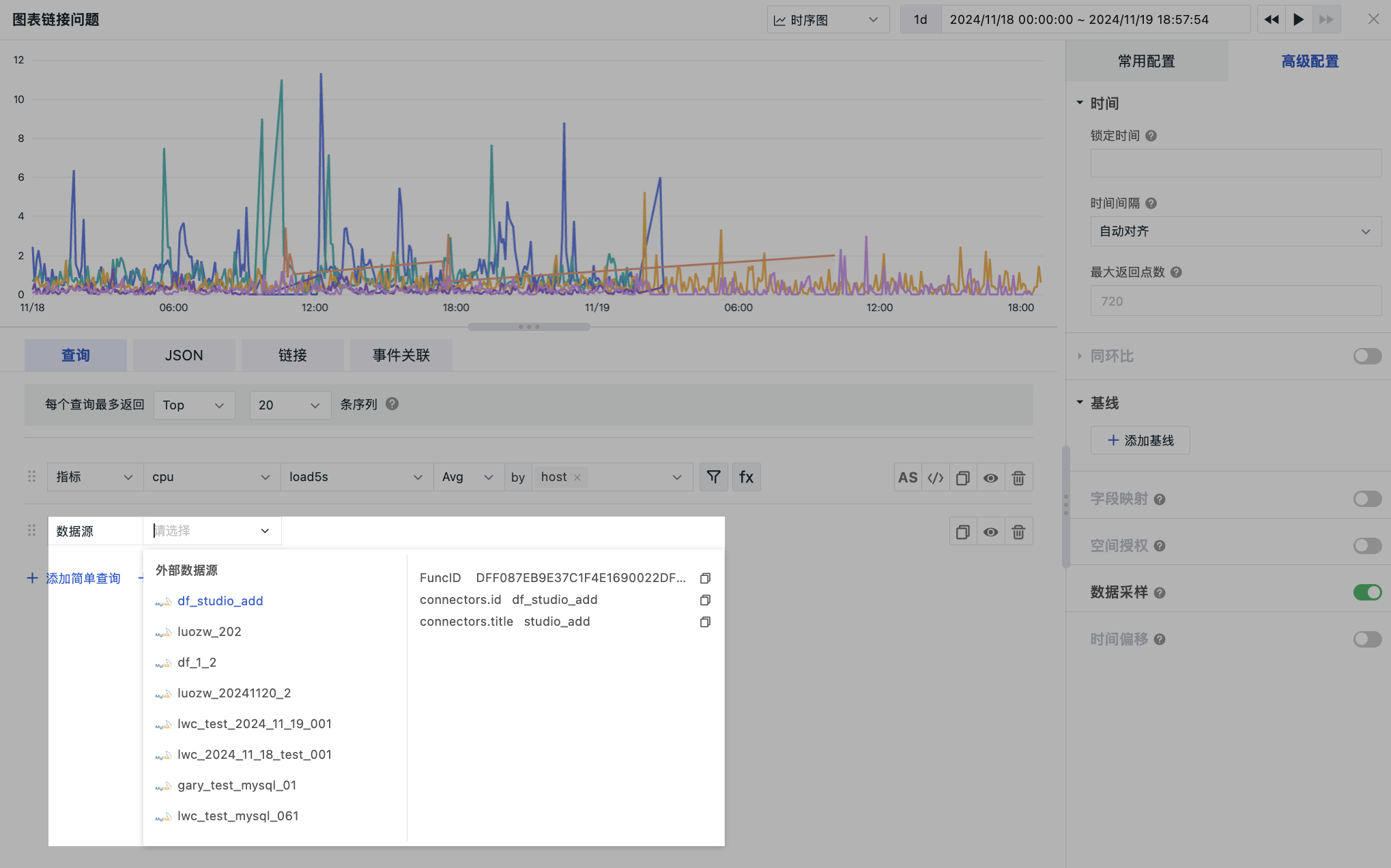

Application Scenarios¶

InGuance, one typical scenario for using external data sources for querying is charts > chart queries.

| Data Return for Different Charts | ||

|---|---|---|

| Line Chart | Pie Chart | Table Chart |